“The Uncanny Valley” is Flash Art’s new digital column offering a window on the developing field of artificial intelligence and its relationship to contemporary art. The last decade has seen exponential growth in the aesthetic application of AI and machine learning: from DeepDream’s convolutional neural networks that detect and intensify patterns within individual images; to NST (neural style transfer) techniques that manipulate one image into the style of another; to GANs (generative adversarial networks) that digest large datasets of images in order to generate new visions without human intervention.

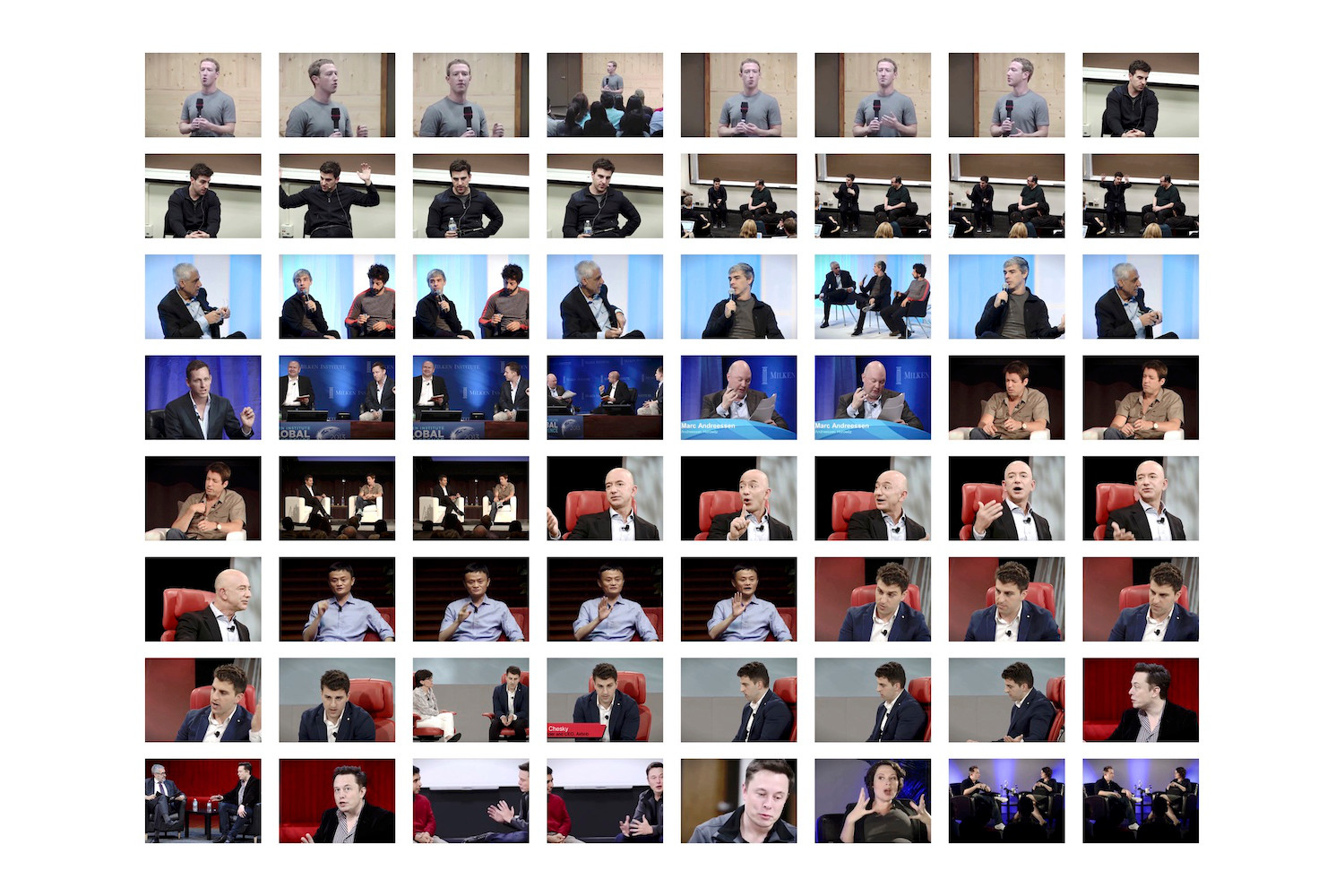

Although the community of computational artists and creative AI hackers still exists largely outside of the contemporary art scene, a growing body of artists has sought to traverse both territories, in the process foregrounding the cultural, ethical, and social problems that underpin our new digital architecture. In recent years, Jake Elwes has distilled the full range of AI-informed strategies into a diverse series of outputs: transcriptions of tech leaders’ numerical babblings (dada da ta, 2016); video installations projecting conversations between two neural networks (Closed Loop, 2017); and 2016’s Auto-Encoded Buddha — a tribute to Nam June Paik’s TV Buddha (1974) — in which a computer struggles to depict the Buddha’s true essence. Through these works and others, Elwes has actively positioned himself within the long histories of video and computer art, and against the notion that AI is capable of expressing intentionality.

Alex Estorick and Beth Jochim spoke to him upon the release of his latest incarnation of “The Zizi Project,” a collection of works that explores the intersection of artificial intelligence and drag performance.

BJ: Despite being trained in fine art, you now incorporate machine learning into your artistic practice. What motivated you in pursuing this path?

JE: While I do have a traditional training from the Slade School of Fine Art, I was always interested in pushing the boundaries of media art and working with systems and technologies. During my time there, I was one of the few people using computer code as my medium, although there were some excellent new media and conceptual artists who pushed me to consider what I was doing beyond the technology involved. In 2016, I discovered the potential of machine learning at the School of Machines, Making & Make-Believe in Berlin after being introduced to it by the artist and educator Gene Kogan. Having worked with creative software and coding for many years, I was blown away by the conceptual underpinnings of machine learning — the technical aspect and its philosophical and political implications. Since then, a major theme in my work has been the investigation of machine-learning techniques in order to critically and poetically examine the field of artificial intelligence.

AE: Your works up to this point are striking for their heterogeneity, as though working with AI is a process of critiquing biases and traditions of digital representation by adopting only the most appropriate approach to a given problem. How would you define the envelope within which you work?

JE: As a process of creative enquiry and personal agency. Machine learning creates systems which reflect the biases of the people who build them and the networks they’re a part of. However, these same techniques also allow us to reflect on and illustrate the problems in how the machine-learning models were trained, and by extension the problems that need addressing in our material world and social structures. In my current work, I’m trying to use machine-learning systems to reflect back their flaws and capabilities, for instance the limits of standardized public datasets and the state-of-the-art computer vision algorithms that are used increasingly by government agencies and private companies. In these works, I’m interested in appropriating as well as exemplifying existing datasets and techniques. However, I’m also interested in how creating our own data and systems can empower us to consider both what’s included and whose identities are represented, while also demystifying the AI research field.

AE: Your work with Roland Arnoldt, Auto-Encoded Buddha (2016), pays tribute to Nam June Paik. This implies that you are conscious of working within a long lineage of artists exploring the relationship between art and technology. However, your strategies seem to confront largely contemporary problems. How useful is it to speak of genealogies in this field?

JE: Well, I suppose you can do both. Today we have a different set of issues facing us, which we can’t overlook when making work with and about new technology. Nam June Paik himself was fascinated by the technological potential of his own time, and also prophesied a future defined by an internet. I’m mindful of how artists in the past have considered this field, but their processes were also defined by a nascent technology that has grown exponentially since then. Exploring the world through art remains a continuous process even if the means of exploration and issues involved have changed radically. I can only hope that what I make now may have something to say in the future, but who really knows?

AE: Harold Cohen’s early program, AARON, was to a great extent an extension of his career as a painter, which followed a highly modernist logic. Are you conscious of your art returning to the same problems of codes, languages, and media that often characterized modernist debate? Or do you find this to be an unhelpful analogy?

JE: Absolutely, I think it’s important for artists working with technology not to create in a void. The work of many of my favorite artists (John Cage, John Smith, and Nam June Paik, for instance) derives from conceptualism or structuralism. Harold Cohen was in fact an alumnus of the Slade and started many of the conversations surrounding computer art, and about agency and creativity when using computer code. Although the results are at times poetic and beautiful to look at, some of the systems art and generative art that this led to reflects a very cold and disconnected way of making work. I’m also aware that this is quite a white male canon, as it undoubtedly was in the early days of computer art and conceptual art. Today there are many more voices and perspectives which have influenced me, from digital media and queer theorists such as Hito Steyerl, James Bridle, and Zach Blas, to artists researching systemic bias in technology such as Joy Buolamwini, Libby Heaney, and Kate Crawford.

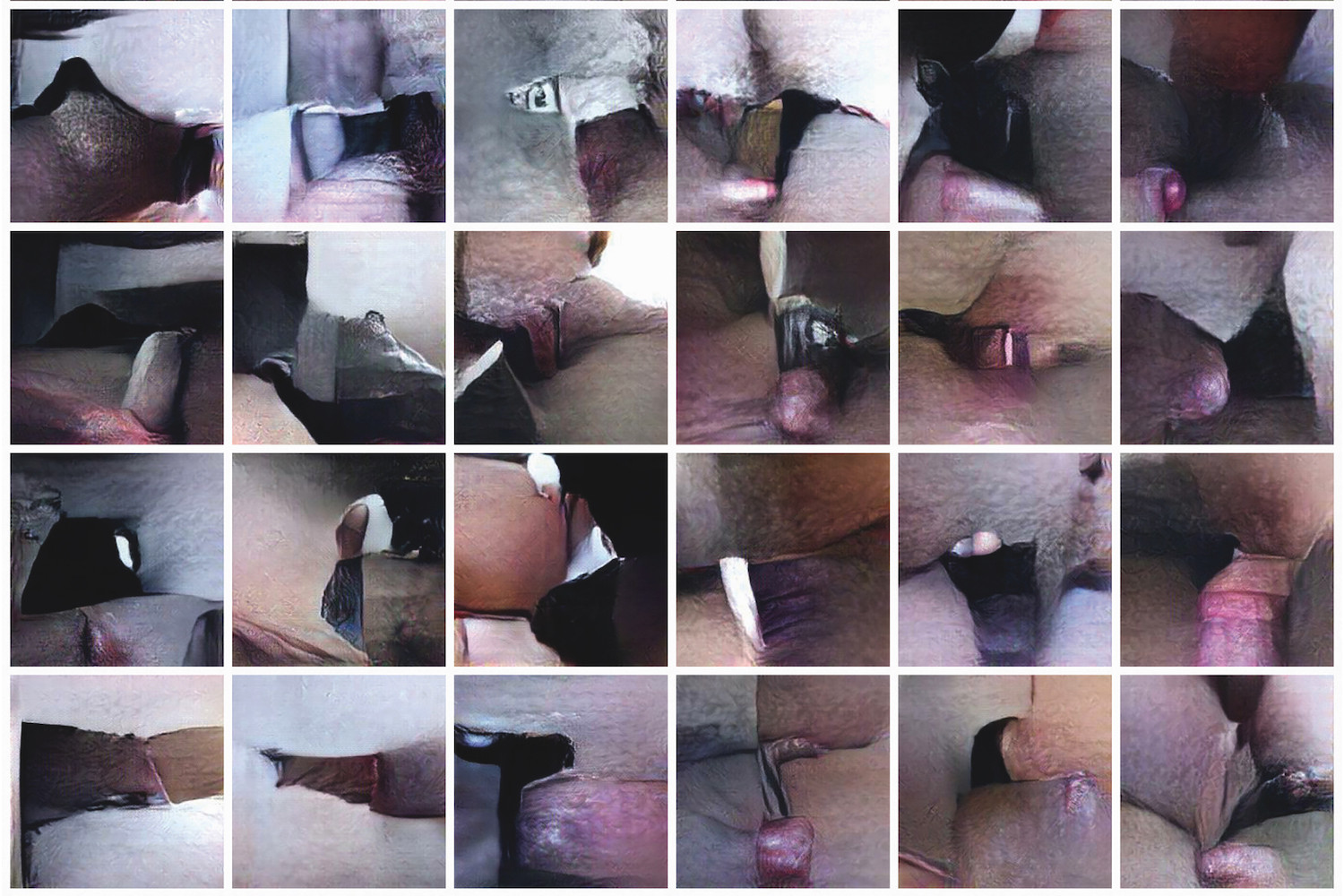

Perhaps initially, like the early computer artists, I wanted to create work that challenged the notion of artistic agency when using black-box machine-learning technology as your process. The convolutional neural networks I was using are capable of creating emergence in a far more complex way than Marcel Duchamp, John Cage, or Harold Cohen could have imagined. This was my focus for Closed Loop (2017), a system whereby the audience could watch two machine-learning models in conversation: one interpreting in words, the other interpreting with generated images. I never controlled or curated the outputs, and it was fascinating to see the two networks branching off and misinterpreting each other. Through my removal of human agency I wanted to challenge what it meant for a computer to make a creative move, and by extension for the system to “think” or “dream.” However, I also realize that this sort of philosophical enquiry can be dangerously misleading for the audience, further mystifying an already misunderstood and anthropomorphized reading of artificial intelligence. In Closed Loop, the mistakes and misunderstandings of the network were due to the limitations in its training, and at no point was the system truly autonomous. Humans built the system, chose the parameters and the datasets to feed it, and displayed the output. But works like Machine Learning Porn (2016), dada da ta (2016), and “The Zizi Project” (2019–ongoing) also look to revive poetry and humor, and give more prominence to social issues.

AE: A work like Zizi – Queering the Dataset (2019) not only calls into question the binary gendering of training datasets but also the insidious nature of deepfake technology. Do you think terms like “realism” and “abstraction” are still relevant to the discourse surrounding art and AI or are they outdated?

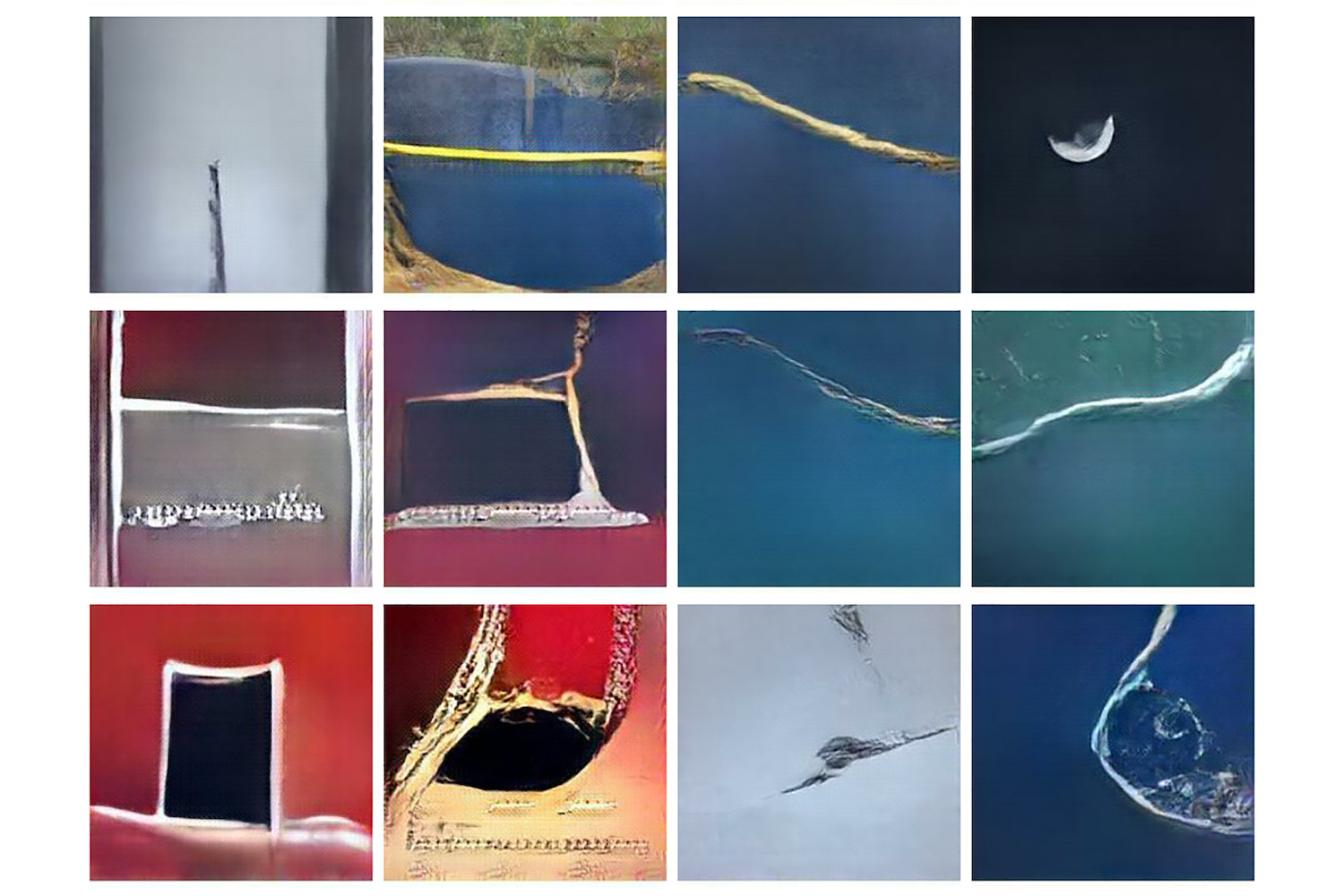

JE: I think realism and abstraction are useful concepts for talking about imagery generated by artificial intelligence, but they also need some unpacking. I don’t find them so useful when talking about my deepfake drag character Zizi, as that work for me is more to do with analyzing bias and the limitations of datasets and deepfake training. My work Latent Space (2019), however, plays on the idea of what it means for a machine-learning model to create “abstraction” in a more conventional sense. I took a model built to generate hyperrealistic fake images, which had been trained on ImageNet (a public dataset containing fourteen million images of all sorts of things). Instead of making it create the most realistic image it could of any one object, as it was built to do, I created a video of it floating between the coordinates that it had absorbed without aiming for representation. Having learned the colors and basic compositions from the photographic dataset, the results did bear striking similarities to abstract expressionism, though they were still derived from the human inputs. Perhaps abstract painters are, in reality, doing something similar, only they are reflecting on a much larger dataset of visual material, memories, and sensations.

Zizi – Queering the Dataset was created by queering an existing machine-learning model for creating faces, whose dataset of real faces was largely homogeneous and contained a significant Western bias. My idea was to inject this dataset with a thousand images of drag kings and queens as a way of challenging gender and exploring otherness. This caused the network to generate queerer, more ambiguous and more fluid faces and identities. I am fascinated by what happens when these faces start to break down completely, as if the inclusion of drag has broken the system. I suppose you could argue that this is a form of abstraction.

BJ: In Zizi & Me (2020) you open the black box to understand how AI systems work. In particular you manipulate the original dataset and use deepfake technology to create the world’s first deepfake drag queen. Can you discuss your findings and why you have chosen to convey your message through a cabaret performance?

JE: Zizi & Me is a double act between the internationally acclaimed drag queen “Me” and a deepfake clone of “Me.” We aim to use cabaret as a fun, accessible, and entertaining way to explore and challenge narratives surrounding AI and society. In the video preview we used the musical theater classic “Anything You Can Do (I Can Do Better)” (1946) as a tongue-in-cheek way of commenting on job automation, with AI certainly not replacing the role of a drag queen any time soon.