“The Uncanny Valley” is Flash Art’s new digital column offering a window on the developing field of artificial intelligence and its relationship to contemporary art.

It has been suggested that our consciousness allows us to be aware of 1) not just the reality of three dimensional objects, but 2) the potential of language, and 3) the potential of art, which can unlock and display information about that fourth dimension, patterns that exist but cannot be perceived through the direct input of our senses.

— from Pharmako AI by K Allado-McDowell and GPT-3

Sooner or later, we all become Fox Mulder from The X-Files. We want to believe. Sometimes this tendency manifests itself in conventional ways: religion, marriage, financial speculation. Sometimes it is more esoteric: chemtrails, QAnon, ethical capitalism. AI falls somewhere on this spectrum. Attitudes toward it range from true believers who scrupulously pore over every emanation from GPT-3 for the latest “proof” of the advance of machinic intelligence, to deep skeptics (my culture, I suppose), who are universally recognizable by a particular genetic tic: our eyes instinctively roll when we encounter the words “advance” and “AI” in the same sentence. It’s not that my people don’t believe computation is advancing rapidly, or that its capacity to simulate, emulate, and replace human activities is becoming ever more sophisticated. It’s that there’s an overwhelming problem, a problem of power. Power always derives from belief.

What if I told you there was a machine that could write a poem? What if I told you there was a machine that could make a painting? What if I told you there was a machine that could write a symphony? Well? What if I told you there were people in charge of packaging these ideas and selling them back to everyone else for venture capital? Answers may depend on your preexisting beliefs. Value is a notoriously individualized concept. There are, after all, plenty of human beings who can’t write a symphony, yet almost no one denies that they are conscious. Art is considered a kind of crucible for defining the boundaries of AI. If a machine can make an artwork that moves people, how dare we deny that it has a consciousness equal to, or greater than, a human’s? You could ask the same question about sunsets. The moved mind matters more than the mind of the mover.

The philosophical substrata of the strong AI/weak AI/no AI debate are turned over with seasonal regularity. The essential arguments range from the claim that AI is possible, even inevitable, to the claim that it isn’t possible at all and that talking about AI amounts to a kind of auto-gaslighting. Sometimes matters take an interesting if ridiculous turn, as when someone like Daniel Dennett suggests that human consciousness itself doesn’t really exist. At other times, we revisit the same ground: we are speaking Chinese without knowing it, as in John Searle’s famous thought experiment, in which a person locked in a room follows a rulebook to answer messages slipped in from outside, manipulating symbols that are meaningless squiggles to the person inside but are in fact Chinese characters, and thereby convincing those outside that the room understands Chinese. We are trying to find out if a computer can carry on a conversation as boringly as a human. It is worth remembering the origin of Alan Turing’s “imitation game” at moments like these: in the game as Turing first described it, a man tried to convince an interrogator, who could communicate with the players only in writing, that he was a woman. In light of how much has been revealed about the complexities of gender, it is unsurprising that Turing himself considered the question of whether machines could think “too meaningless to deserve discussion.” And yet it does get posed. One might pause to ask why. It cannot simply be a technological question; there are vastly simpler ways that technology might improve the world. The question of AI is inherently existential, as many films about AI illustrate more clearly than much of the scientific discourse does. Melvin Kranzberg’s famous dictum, “Technology is neither good nor bad, nor is it neutral,” cuts to the core of the problem: if machines can be intelligent, they will enter the sphere of power and responsibility.

As with the question of gender, it is when these power dynamics are unveiled that the AI discourse becomes most urgent. What happens if there is a collective acceptance that some form of AI exists? If one takes Searle’s position, we can never truly know whether an AI is experiencing the world in a way that “is” consciousness. But where the lack of knowledge creates a space, belief rushes to fill it. A key tenet of the strong AI position is that if a simulation of human consciousness is good enough, the ontological question of what consciousness qua consciousness is becomes irrelevant. While I agree with Searle on ontology, I also agree with the strong AI camp on the practicalities. If people believe an AI is conscious in the sense in which we speak of human consciousness, it doesn’t really matter whether it is or not.

Recognition of this dynamic is crucial in a social and political context in which transnational corporations are racing to produce some form of proprietary AI entity. The capacity to critique AI discourse is in a dramatic state of flux. Historically, the specialized technical knowledge required has made AI creation the province of computer scientists and advanced mathematicians. Growing technical literacy among the general population is reducing this information asymmetry, but many dangerous hierarchies are already entrenched, and they will have to be dismantled, no easy task, if AI is to have anything other than a tyrannical relationship with humans. In his short book Words Made Flesh: Code, Culture, and Imagination (2005), Florian Cramer discussed the pre-digital history of executable codes and programs, noting that “any code is loaded with meaning,” some codes being more intelligible than others. Meanings and values change over time, but only in relation to power. If code and its creations are the sole property of private tyrannies like corporations, the notion that any AI entities, should they come to exist, could enhance the liberatory aspect of human experience is more fantastical than anything Philip K. Dick ever contrived.

The brutality of machinic cognition and algorithmic processes is already bleakly apparent, especially in the vast complex of oppression that is the US prison system. Writing in 2016 for the investigative journalism website ProPublica, Julia Angwin and her co-writers examined COMPAS, an algorithm-driven risk-assessment system used in criminal justice decisions ranging from bail to parole. Their report found that the algorithm wrongly flagged Black defendants as being at high risk of recidivism far more often than white defendants, a preposterously unjust disparity that shapes decisions about their freedom and no doubt reinforces the algorithm’s biased data set for the next generation. As data-driven AI systems control more and more political and economic space, one can easily imagine a world in which difficult or incendiary political decisions are handed to biased AI systems for adjudication because of their purported “objectivity.” The advent under the Bush administration of “signature strikes” (embraced fully by the Obama administration, it must be noted), in which lethal drones, eventually intended to be self-targeting, attack victims selected through “pattern-of-life analysis,” is only the most dystopian example of this emerging proposition. The erosion of democracy is quickly giving way to a kind of techno-feudalism in which humans increasingly abdicate agency. The more we believe AI systems are better than humans at making choices, or at least no worse, the harder it will be to reclaim lost power.

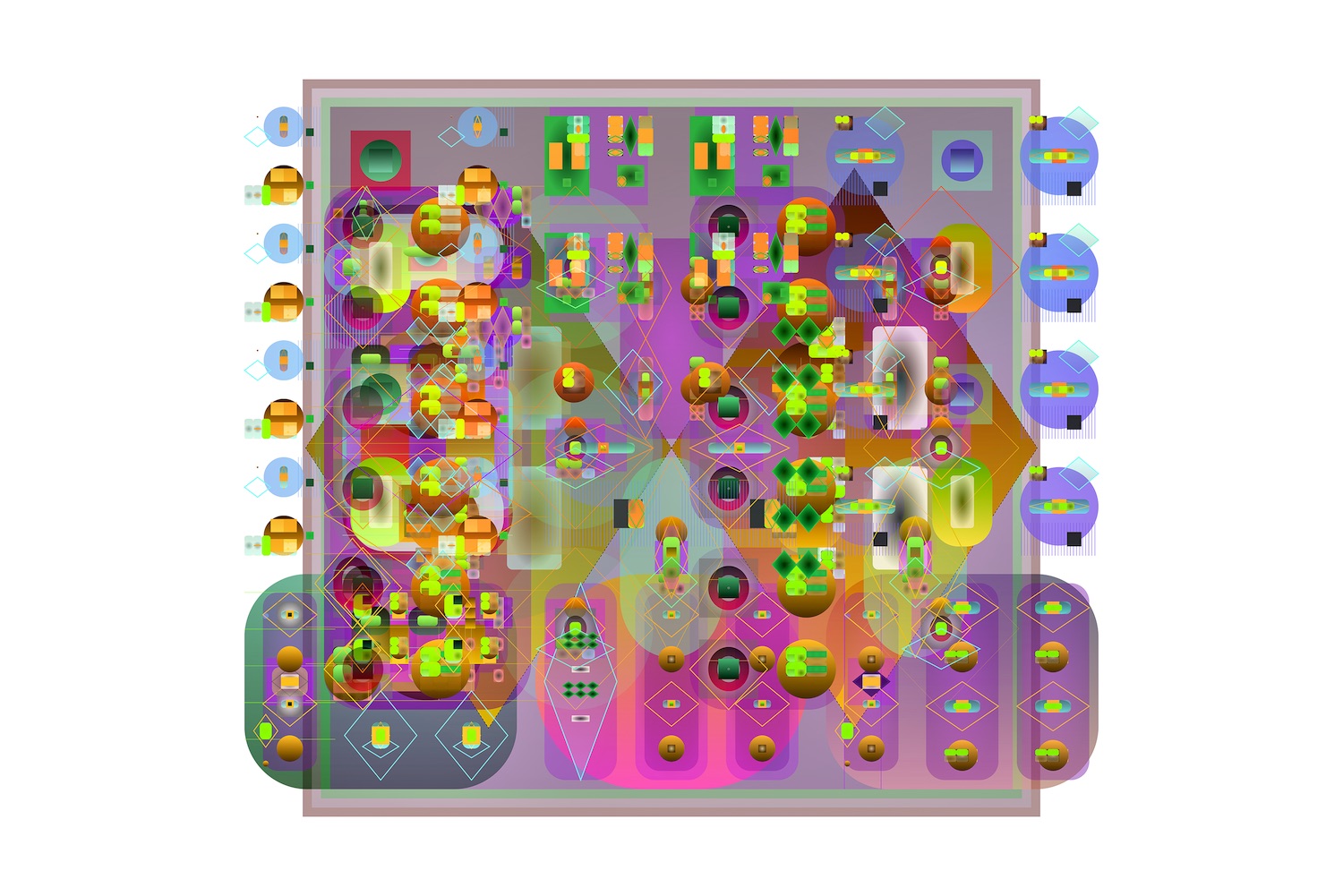

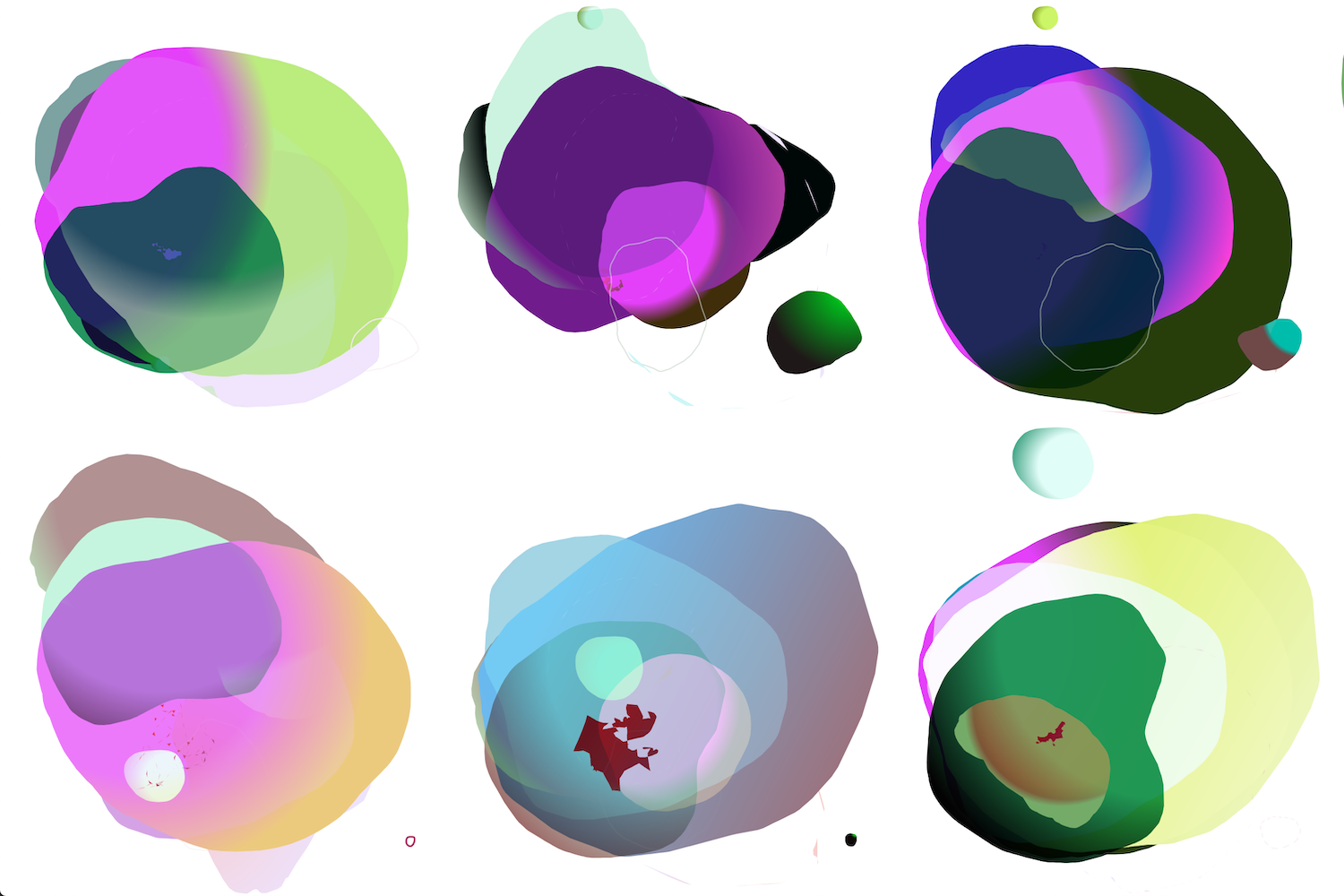

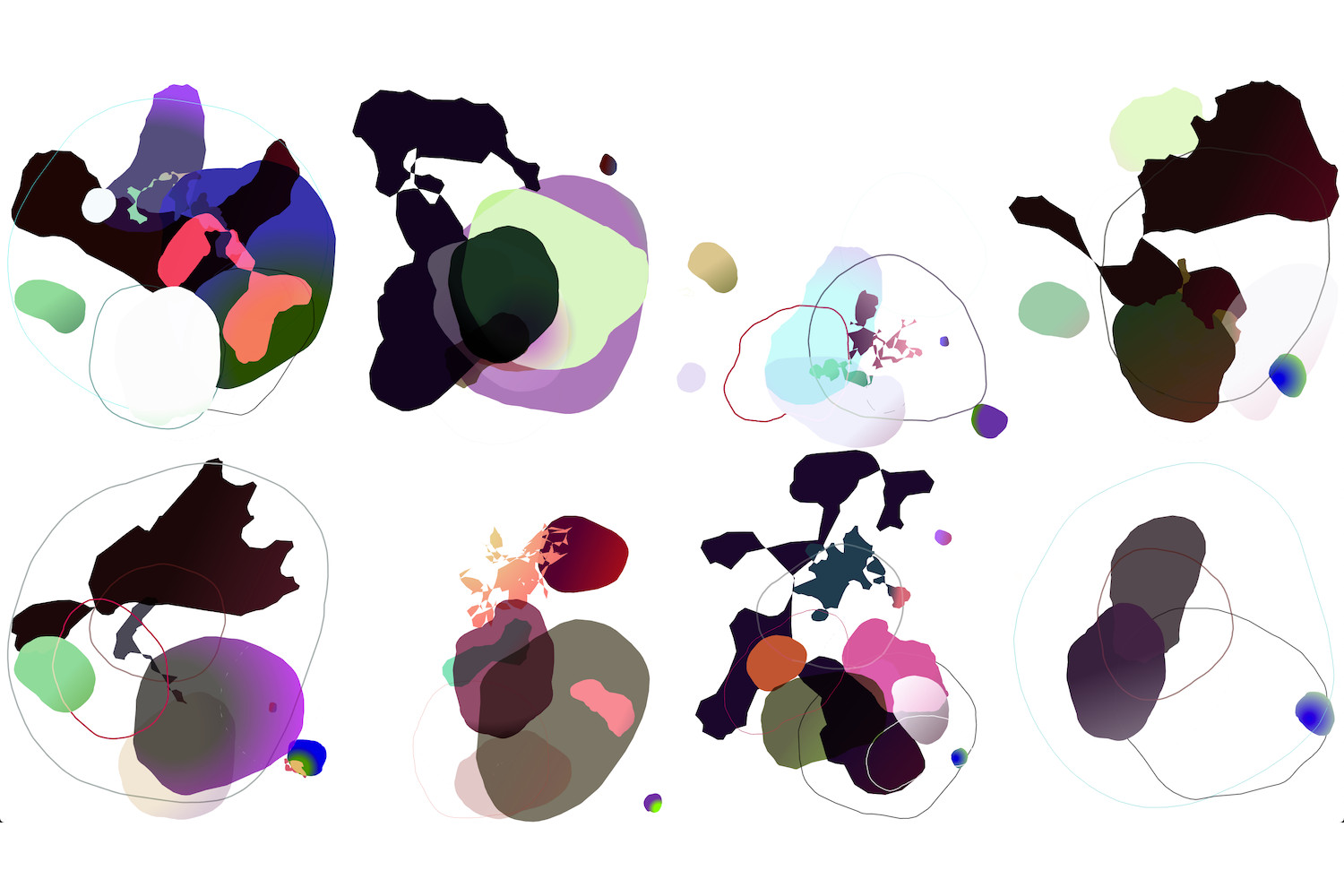

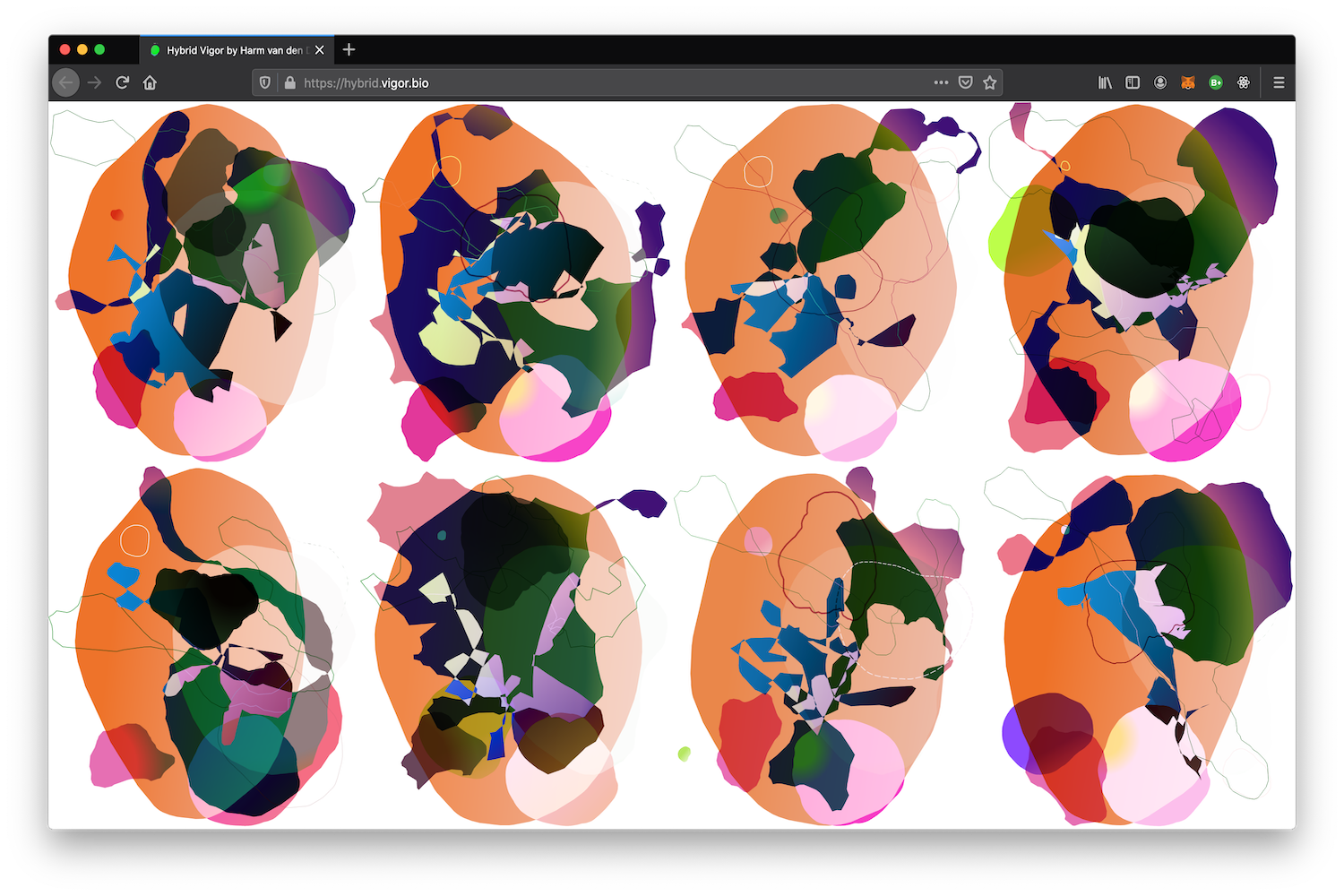

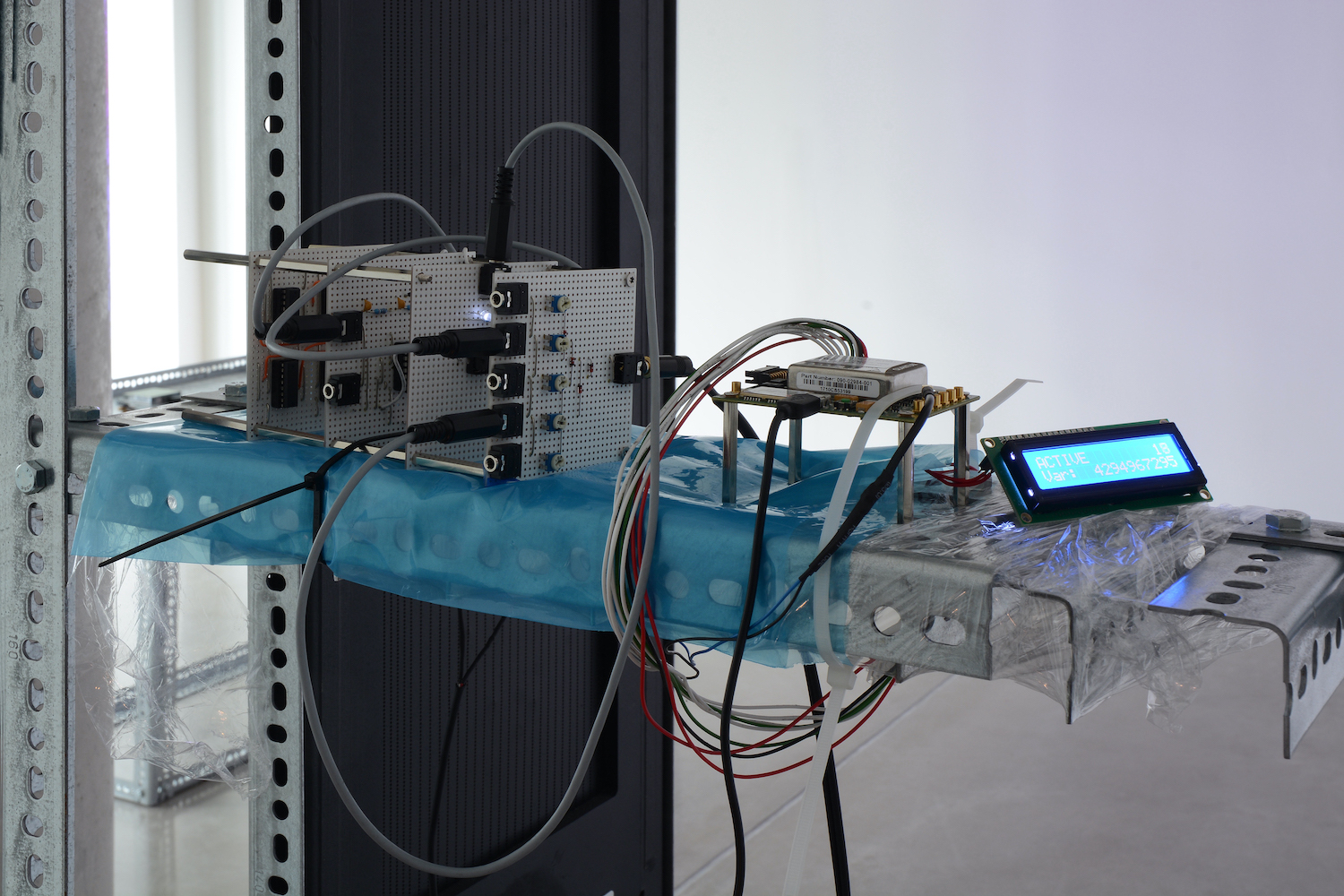

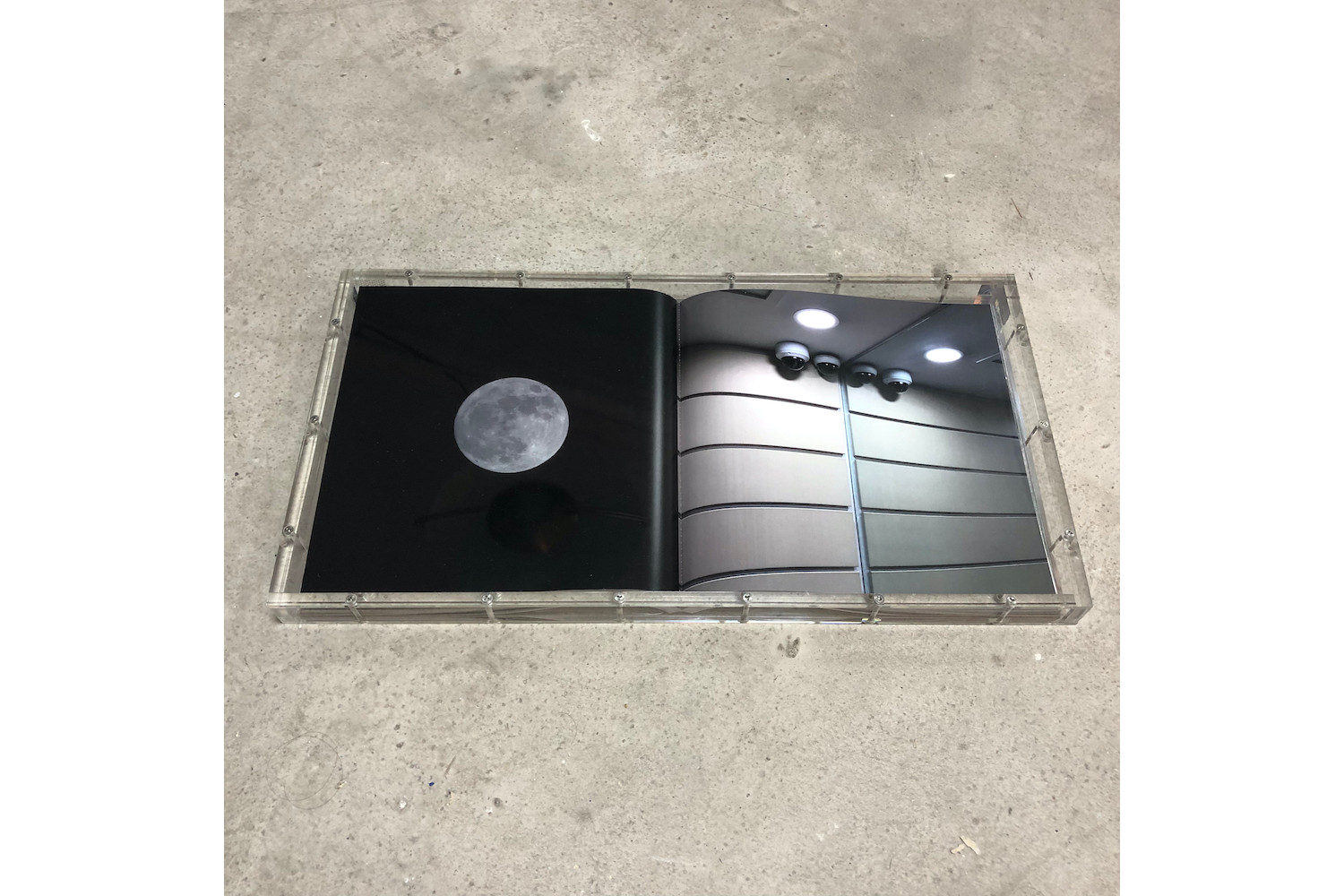

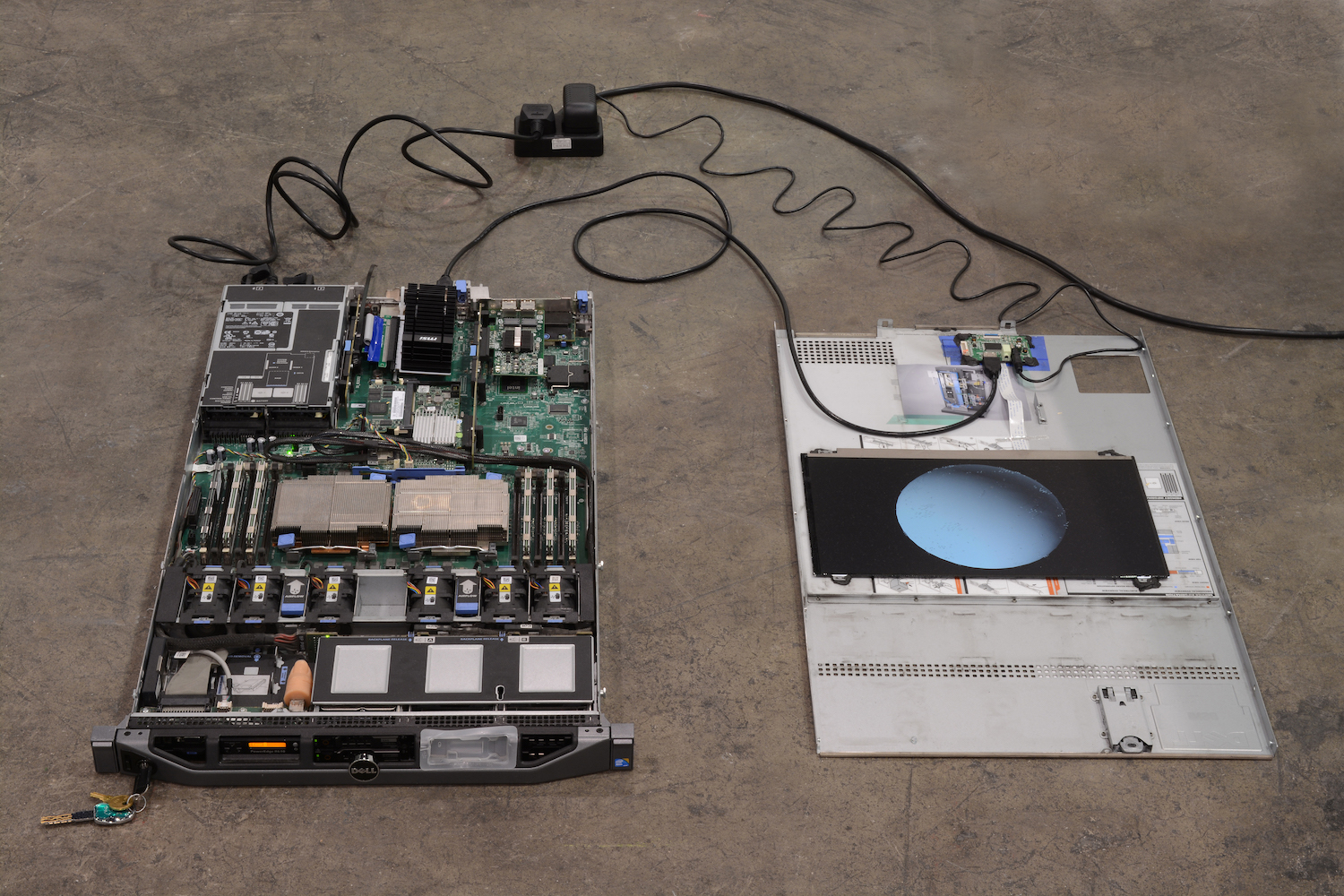

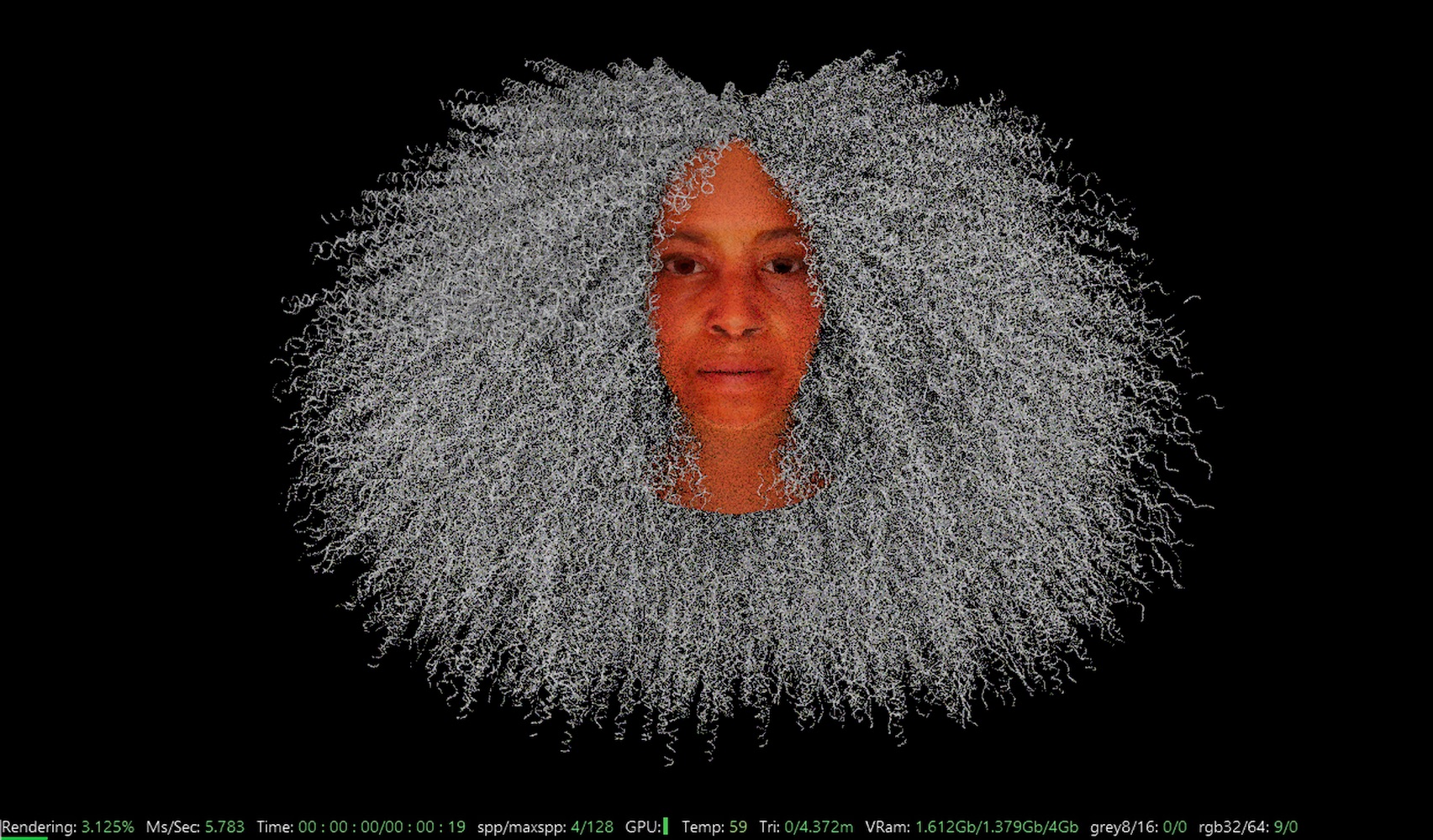

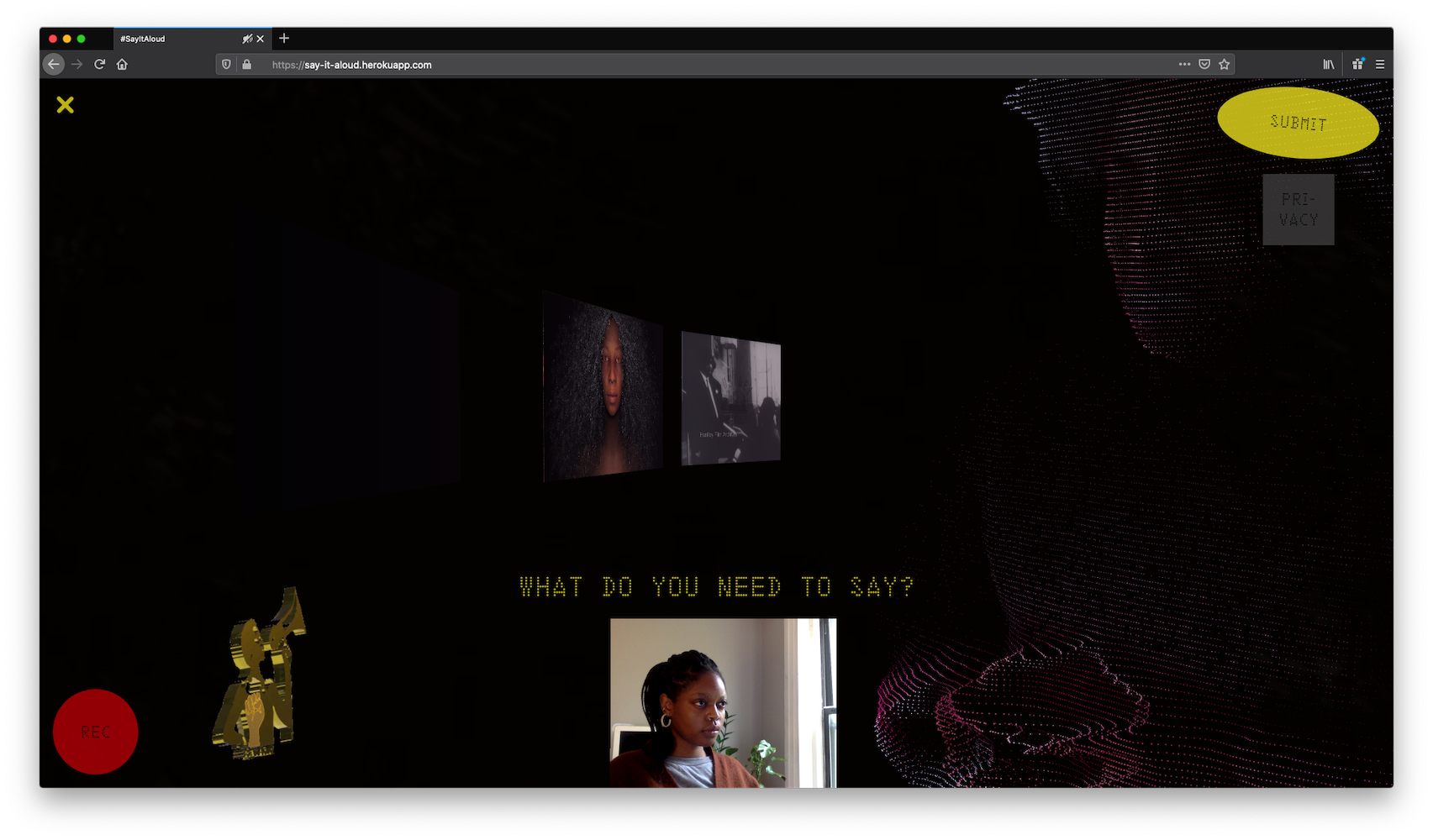

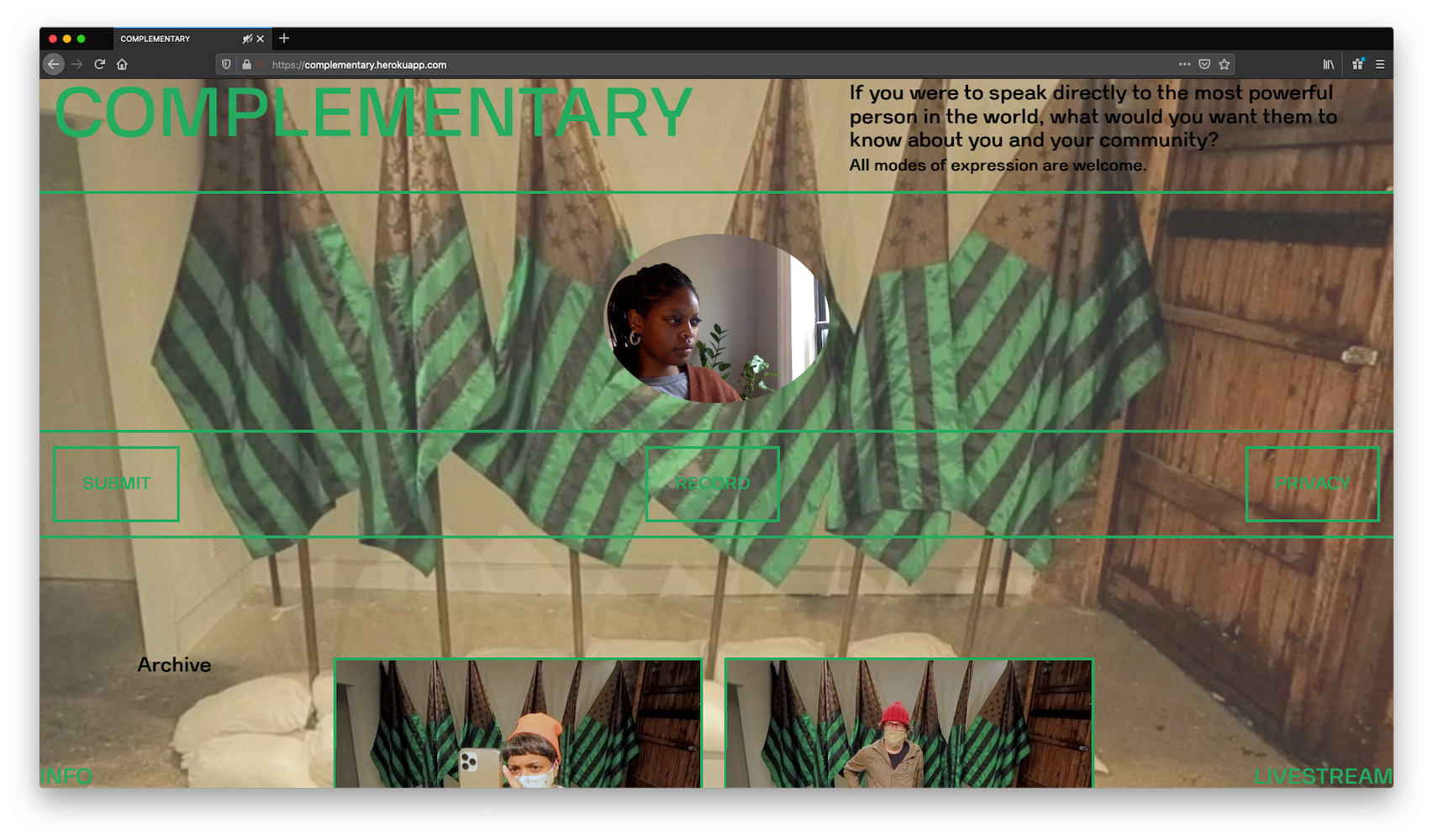

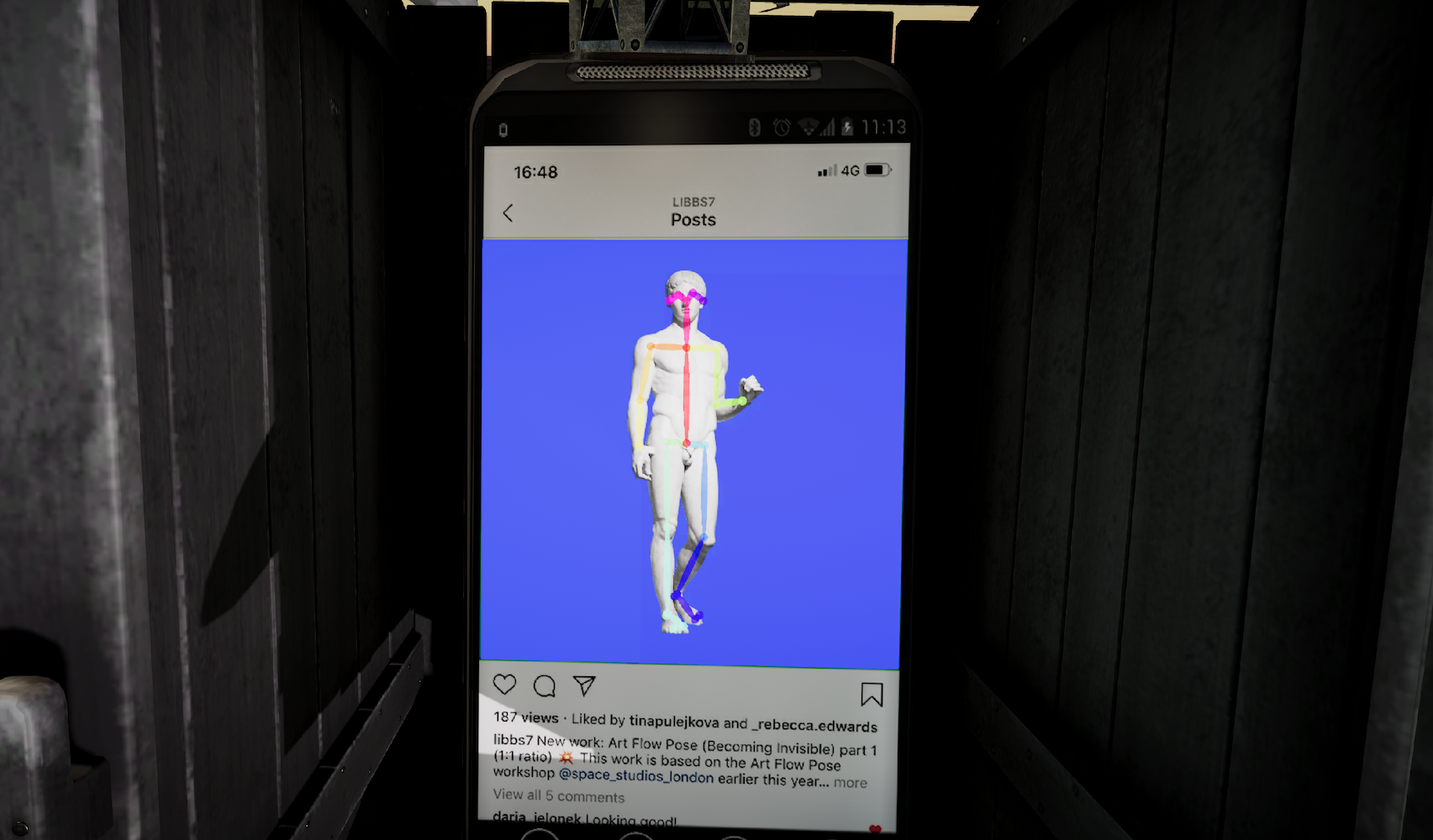

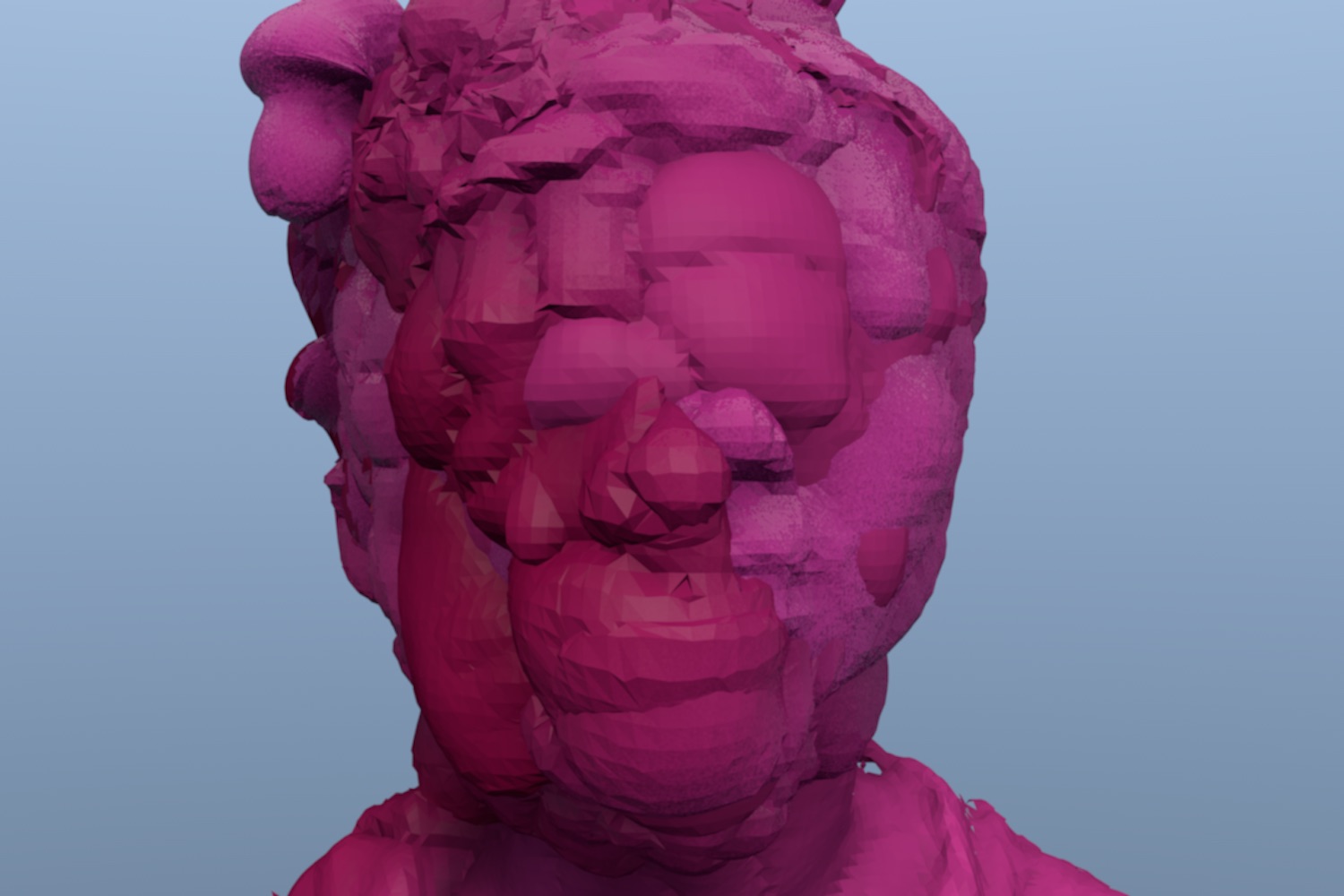

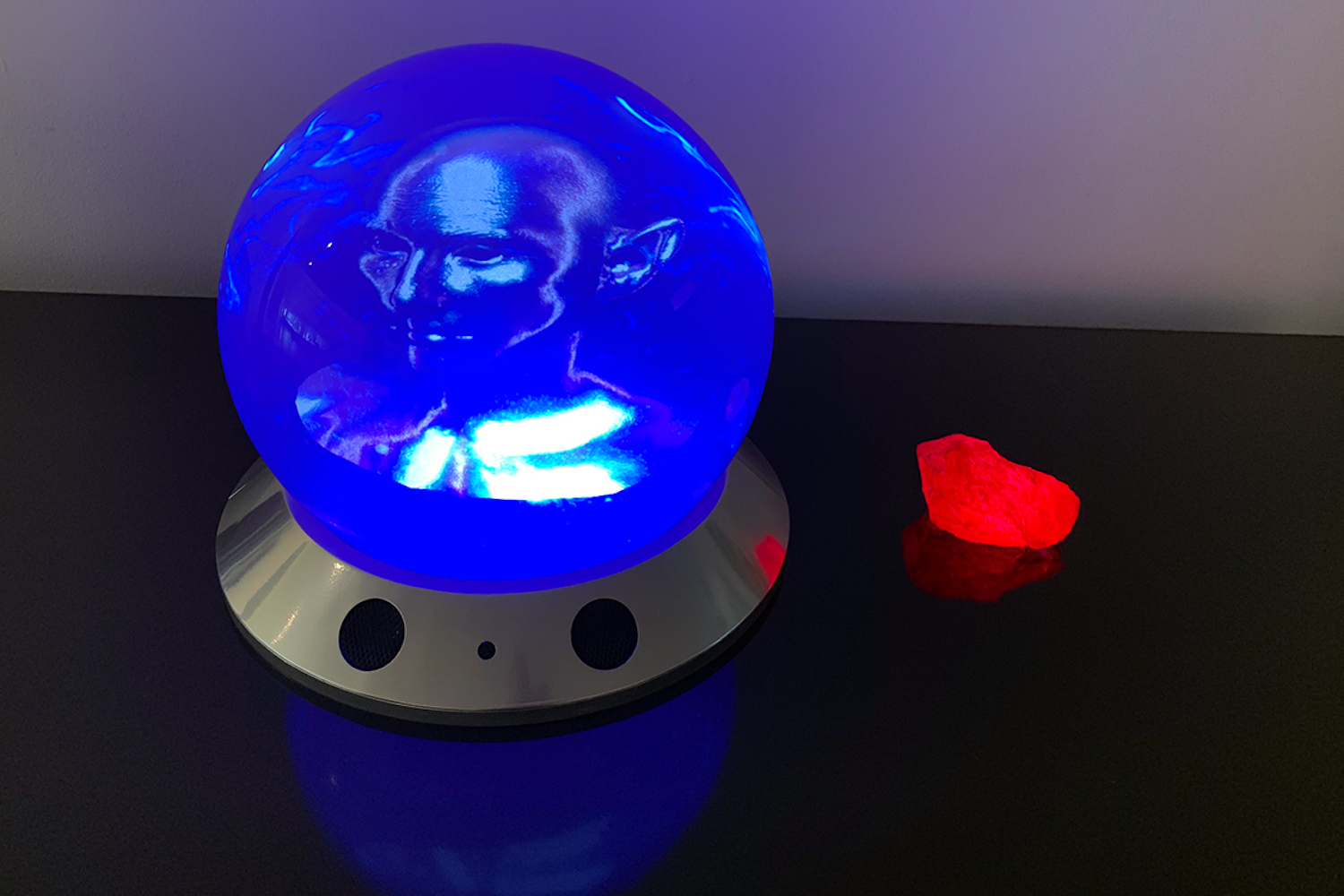

Trends relating to machine intelligence in contemporary art tend to swing from dewy-eyed optimism to the apocalyptically grievous. Certainly, computer-assisted IRL nightmares such as those described above have pushed the pendulum toward the skeptics. Powerful works by Stephanie Dinkins and Joy Buolamwini have foregrounded the racial inequalities residing in the white supremacist black boxes of algorithmically driven power systems. Zach Blas and James Bridle, meanwhile, have turned the forensic gaze of digital surveillance back on itself. Artists like Harm van den Dorpel are peering into the value systems of algorithms themselves, asking how they know what they (and we) think they know. Libby Heaney’s interest in deepfakes, for its part, has highlighted the deepest fakes of all: those perpetrated by our own willing credulity.

It is through this lens of belief that artists have made the canniest appraisals of AI. Goshka Macuga’s exhibition at Fondazione Prada, “To the Son of Man Who Ate the Scroll,” explicitly addressed the ways in which beliefs about machine intelligence quickly give way to beliefs about the vatic power of an AI “mind.” The work also helpfully drew attention to differences between “intelligence” and “knowledge” that are all too easily conflated in AI discourse. Macuga’s animatronic prophet spouts high-end Magic 8-Ballisms drawn from real philosophical texts. Not a million miles from GPT-3’s approach, Macuga holds a bleak mirror up to a Black Mirror society whose willingness to believe is directly proportional to the degree of power it is willing to cede. James Baldwin’s famous quotation of the spiritual “Oh Mary, Don’t You Weep” in the title of his searing work of social analysis, The Fire Next Time (1963), hovers at the edge of Macuga’s work. This time the supposed super-brain inhabits a lo-fi mannequin, so the absurdity of its pronouncements is easy to assimilate. But what happens when the prophet resides in the digital ether itself? Will nonbelief be an option? Will the all-consuming urge to believe breach the last of the firewalls between technology and state power? No one has the answer yet, but as the song goes, expect this old world to reel and rock.