In the original 1950s vision of artificial intelligence, the goal was to teach computers to perform a range of cognitive tasks. These included playing chess, solving mathematical problems, understanding language, recognizing the content of images, and so on. Today, AI –– especially in the form of supervised machine learning –– has become a key instrument of modern economies, employed to make them more efficient and secure: making decisions on consumer loans, filtering job applications, detecting fraud, etc.

What has been less obvious is AI’s role in our cultural lives, and its increasing automation of the aesthetic realm. Consider, for example, image culture. Just as the Instagram “Explore” screen recommends images and videos based on what we’ve previously liked, so Artsy recommends artworks we might be interested in; meanwhile all image apps are able to automatically modify captured photos according to the norms of “good photography.” Other apps “beatify” selfies. Still other apps edit raw video automatically into short films in a range of styles. (Outside image culture, the uses of AI range from music recommendations in Spotify and iTunes, amongst other music services, to the generation of characters and environments in video games.)

Does this kind of automation reduce cultural diversity over time? And does, for example, the automatic editing of users’ photos lead to the standardization of their “photo imaginations”? Might we apply AI methods to large samples of cultural data in order to quantitatively measure the diversity and variability of contemporary visual culture, as well as to track how they change over time?

AI is already automating aesthetic choices –– recommending what we should watch, listen to and wear –– as well as aesthetic production, including the realm of consumer photography. Its importance to the different genres of the culture industry — fashion, logos, music, TV commercials — is already growing, and in the future it will play an even larger part in professional cultural production. Yet, for now at least, human experts are still typically making final decisions and producing content based on ideas and media generated by computers. As a case in point, in 2016 IBM Watson was reportedly responsible for the first “AI-made movie trailer.”[i] But the process still relied on the collaboration between a computer –– used to select shots from the movie suitable for the trailer –– and a human editor, who was responsible for the editing. We will only be able to talk about a truly “AI-driven culture” when computers are creating media products from beginning to end. We are not there yet.

Today’s automation of cultural production still typically relies on a form of AI known as “supervised machine learning,” by which a computer is fed multiple similar objects –– for example movie trailers from a particular genre –– so as to gradually absorb the principles of that genre. Like art theorists, historians or film scholars, AIs analyze repeated examples drawn from a specific area of culture to ascertain their common patterns. But one crucial difference remains. A human theorist sets out explicit principles to be understood by a reader. For example, The Classical Hollywood Cinema (1985) –– a standard university textbook for film studies –– provides answers to questions such as “How does the typical Hollywood film use the techniques and storytelling forms of the film medium?”[ii] Yet the consequence of schooling AIs in many cultural phenomena is a black box. They can classify a particular film as belonging or not belonging to “classical Hollywood cinema,” but we have no idea how the computer came up with its decision.

This raises a series of questions regarding the application of AI to cultural products. Are the results of machine learning interpretable? Or is it simply a black box: efficient in production but useless for human understanding of the domain? Will the expanding use of machine learning in the creation of new cultural objects also make explicit patterns in preexisting objects? And if it does, will it be in a form comprehensible for people without degrees in computer science? Will companies deploying machine learning to generate ads, designs, images, movies, music and so on reveal their new learnings?

Fortunately, the use of AI and other computerized methods to study culture has also been pursued in the academy, where findings are published and accessible. Since the mid-2000s researchers in computer and social sciences have quantitatively analyzed patterns in contemporary culture using increasingly larger sample sizes. Some of this work has quantified the characteristics of content and aesthetics of upwards of a million artifacts at a time. Other qualitative work has built models predicting which artifacts will be popular, and how their characteristics stand to affect their popularity (or memorability, “interestingness,” “beauty,” or “creativity.”[iii])

Much of this work utilizes large samples of content shared on social networks along with data about people’s behavior on these networks. If we examine the quantitative research relating to Instagram, a search on Google Scholar for “Instagram dataset” returns 9,210 journal articles and conference papers (as of July 15, 2017). Of these results, one paper analyzed the most popular Instagram subjects and also the types of users according to which subjects they posted together.[iv] Another paper used a sample of 4.1 million Instagram photos to quantify the effect of filtering on the number of views and comments; while in another paper, researchers analyzed temporal and demographic trends in Instagram selfie content using 5.5 million Instagram portraits.[v] A recent study traced clothing styles across forty-four cities worldwide using 100 million Instagram photos.[vi]

But what about applying computational methods to analyze patterns in historical culture using the available digitized archives? The following studies are of particular interest: “Toward Automated Discovery of Artistic Influence,”[vii] “Measuring the Evolution of Contemporary Western Popular Music”[viii] and “Quicker, Faster, Darker: Changes in Hollywood Film over 75 Years.”[ix] The first paper presents a mathematical model for the evaluation of influence between artists, tested using 1710 images of paintings by sixty-six well-known figures. While some of the adjudged influences correlate with those adduced by art historians, the model also proposed other visual influences between artists. The second paper investigates changes in popular music using a dataset of 464,411 songs released between 1955 and 2010. The dataset included “a variety of popular genres, including rock, pop, hip-hop, metal, or electronic,” with the passage of time revealing an increasing “restriction of pitch transitions” and “homogenization of the timbral palette.” In other words, musical variability has decreased. The third paper analyzes gradual changes in cinematic form as exemplified by 160 feature films created between 1935 and 2010.

These and many other papers contain original and valuable insights based on computational methods that would have been impossible to arrive at through small-group ethnographic observation, let alone “armchair” theorizing. But because most of these studies adopt a statistical –– and therefore summary –– approach, they also have a common limitation. Statistical approaches that summarize collections of cultural artifacts — such as datasets of information regarding cultural behaviors (for instance, sharing, liking or commenting on particular images on social networks) — in order to find patterns or propose relationships will not apply to everything in the dataset.

For science, a statistical model that only works sometimes is a problem because sciences assume that such a model should accurately convey the characteristics of a phenomenon. However, we can use computational methods to study culture without such assumptions, and this is a part of the alternative research paradigm that I call “Cultural Analytics.”[x] According to this paradigm, we do not seek to “explain” most or even some of the data using a simple mathematical model, treating the rest as “error” or “noise” simply because our mathematical model cannot account for it. We should not assume that cultural variation is a deviation from a mean, nor that large proportions of works in a particular medium or genre follow a single or limited number of patterns, such as the “hero’s journey,” “golden ratio” or “binary oppositions.”

Instead, I believe that we can study cultural diversity without assuming that it is caused by variations from some types or structures. Does this mean that we are only interested in the differences and that we wish to avoid any kind of reduction at all cost? To postulate the existence of cultural patterns is to accept that through analyzing data we are making at least some reduction. But despite wanting to discover repeating patterns in cultural data, we should always remember that they only account for some aspects of the artifacts and their reception.

Every expression and interaction is unique, unless it is a 100% copy of another cultural artifact, or produced mechanically or algorithmically to be identical with others. In some cases, this uniqueness is not important in analysis, and in other cases it is. For example, characteristics of faces we extracted automatically from a dataset of Instagram self-portraits revealed interesting differences in how people represent themselves in the medium in particular cities and periods.[xi] The reason we do not tire of looking at endless faces and bodies when we browse Instagram is that each is unique. We are fascinated not by repeating patterns but by unique details and their combinations.

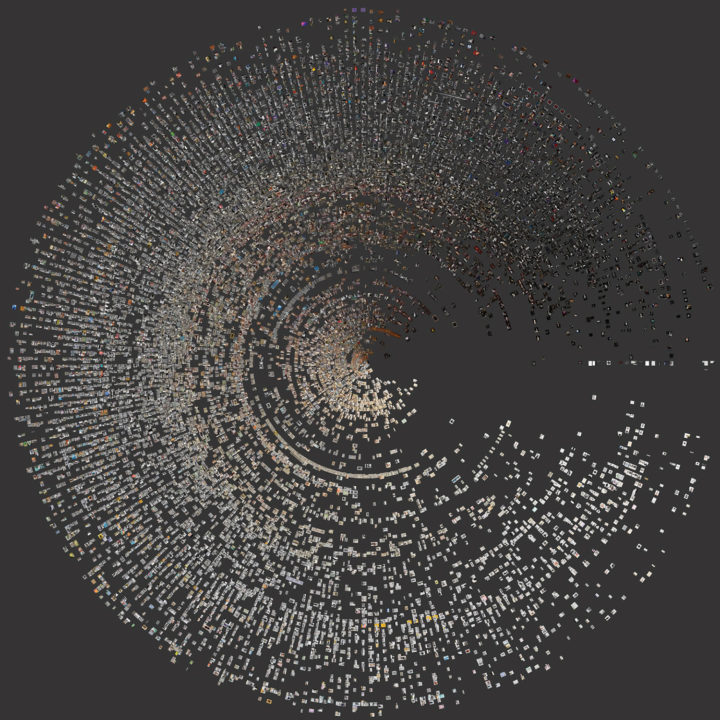

The ultimate goal of Cultural Analytics should be to map and understand in detail the diversity of contemporary professional and user-generated cultural artifacts created globally — i.e., to focus on the differences between visual artifacts, not simply on what they share. During the nineteenth and twentieth centuries the lack of appropriate technologies to store, organize and compare large cultural datasets contributed to the prevalence of reductive cultural theories. Today, a single computer can map and visualize thousands of differences between tens of millions of objects. We no longer have an excuse to restrict our focus to what cultural artifacts and behaviors share, which is what we do when we categorize them or perceive them as instances of general types. And while we may need to begin, due to issues of scale, by extracting patterns in order to draw our initial maps of contemporary cultural production and dynamics, eventually those patterns will recede into the background as we focus more and more on the differences between individual objects. This, to me, is the ultimate promise of using AI methods to study culture.