In March 2016 a rare board game event broke into the mainstream media: a computer had beaten a human Go master. Lee Sedol, a nine-dan wizard in the ancient Chinese board game, had lost in Seoul to AlphaGo, a computer program produced by DeepMind, a subsidiary of Google. To many, this was seen as a first salvo in what would become a Terminator-style war with the machines: Go is a game thousands of times more complex than chess, so much so that beginners often have a hard time determining when a game has come to an end, let alone who has won. If the machines could master the fine subtleties of Go, few human cognitive tasks would avoid their grasp. The optimists, in publications like Wired, expanded on how, by finding novel moves (the now infamous “move thirty-seven”) that centuries of calcified human habit had ignored, AlphaGo had opened up new techniques and avenues in human Go playing. Many of the humans who played against AlphaGo went on to be unbeaten against other humans, seemingly enlightened by their encounter with the mechanical intelligence. But some took a dimmer view.

Word of AlphaGo’s victory came amid a flurry of AI-related news and speculation: not only would “they” be driving our cars, but they would be running our social programs, and ultimately would steal all our clerical and professional jobs. And that would just be the beginning! Once we’d been made obsolete at work, they would begin on art, making us obsolete in our leisure time as well. Suddenly, these mysterious entities are among us, and we talk about them in almost the same reverent tones as our medieval forbears discussed the powers of spirits, etheric influences and humors. A neural network employed by Cambridge Analytica helped Trump to win the election; the AI in self-driving cars will soon make decisions in dangerous driving scenarios about who lives and who dies; an AI made a weird puppy slug image! The average person’s fundamental understanding would not change if we had attributed all those effects to witchcraft. Unlike witches, however, AIs seem possessed of a febrile innocence — they are fragile infants on the cusp of developing their terrible potential.

Yet, ultimately, are we not just looking at the most recent in a long chain of algorithmic human thinking that aims at helping us avoid making choices with our own moral or aesthetic compasses, using systematized decision-making instead? Are the consequences of one system so different from another? Haven’t we lived through a century or so of artworks, music and social and financial policy that has been produced through algorithms already? Why panic?

For the sake of argument, let’s take Arnold Schoenberg’s 1921 systematization of the use of all twelve tones of the Western chromatic scale as a starting point (conceivably we could go back to Bach). By mathematically ensuring that all twelve tones were in equal use (a typical major or minor scale uses only seven of the twelve possible tones), Schoenberg invented a method of composition with no suggestion of a tonal center, no tones weighted over others. Not only did the twelve-tone technique fit in with the prevailing direction of Western music at the time toward intensified dissonance and chromaticism, it also caught a sudden trade wind in Western art by shifting the weight of concern toward the conceptual: once the composer devises the system, a composition could practically set itself in motion.

Schoenberg’s algorithmic approach to composition brought on an explosion of creativity. Composers worked frantically to apply serial techniques to all aspects of a work of music; twelve-tone rows were converted through mathematical processes into twelve-by-twelve square matrices of 144 cells that could, when read along multiple axes, generate entire works. At its worst, integral serialism (as this near-total replacement of the composer’s will with math was sometimes called) is like listening to someone designing a crossword puzzle. But in works by Karlheinz Stockhausen and Pierre Boulez you can see an earnest desire to escape from the boundaries of music as they found it. Like Lee Sedol reacting to the shock of move thirty-seven, there’s a sense that, by letting the algorithm of integral serialism grind up the material of Western music, these composers were encountering new alien sonorities and discovering uncharted musical waters. Stockhausen in particular exploded serial techniques by applying them to electronics and tape editing, combining audio recordings of music that had been edited and spliced according to serial techniques with purely electronic sounds, completely divorced from any systems of intonation or tuning.
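For readers who want to see the mechanics, the matrix construction can be sketched in a few lines of Python. This is a minimal illustration of the standard textbook layout, not a reconstruction of any particular composer's working method: the top row is the original row, the left column is its inversion, and every other row is a transposition of the original. The function name and the example row are placeholders chosen for this sketch.

```python
def twelve_tone_matrix(row):
    """Build a 12-by-12 matrix from a tone row given as pitch classes 0-11."""
    if sorted(row) != list(range(12)):
        raise ValueError("a tone row must use each of the 12 pitch classes exactly once")
    first = row[0]
    # Intervals of the original row, measured upward from its first note.
    intervals = [(pitch - first) % 12 for pitch in row]
    matrix = []
    for interval in intervals:
        # Each matrix row starts on the next note of the inverted row...
        start = (first - interval) % 12
        # ...and repeats the original row's interval pattern from there.
        matrix.append([(start + step) % 12 for step in intervals])
    return matrix


# An arbitrary example row (hypothetical, chosen only for illustration).
ROW = [0, 11, 3, 4, 8, 7, 9, 5, 6, 1, 2, 10]
matrix = twelve_tone_matrix(ROW)
for line in matrix:
    print(" ".join(f"{pitch:2d}" for pitch in line))
```

Read left to right, each line is a transposition of the row; read top to bottom, each column is a transposition of its inversion; reading either backward gives the retrograde forms. That is the sense in which one grid can be read along multiple axes.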

With the Nazi rise to power in 1933, Schoenberg went into exile, settling the following year in Los Angeles, where he continued to compose and to teach, at both USC and UCLA. And it was from this period of Schoenberg’s life that his serial ideas were to take their most fruitful form, through their brief but potent impact on one of his students: John Cage. Cage’s development of serial ideas was drastic; he introduced chance and indeterminacy into the algorithmic systems, fundamentally systematizing many of the conceptual aspects of composition as well. This meant that his chance-based compositions changed from performance to performance, while other elements stayed the same. This vague congruence between individual manifestations of Cage works is where we begin to see the modern shape of today’s neural networks.

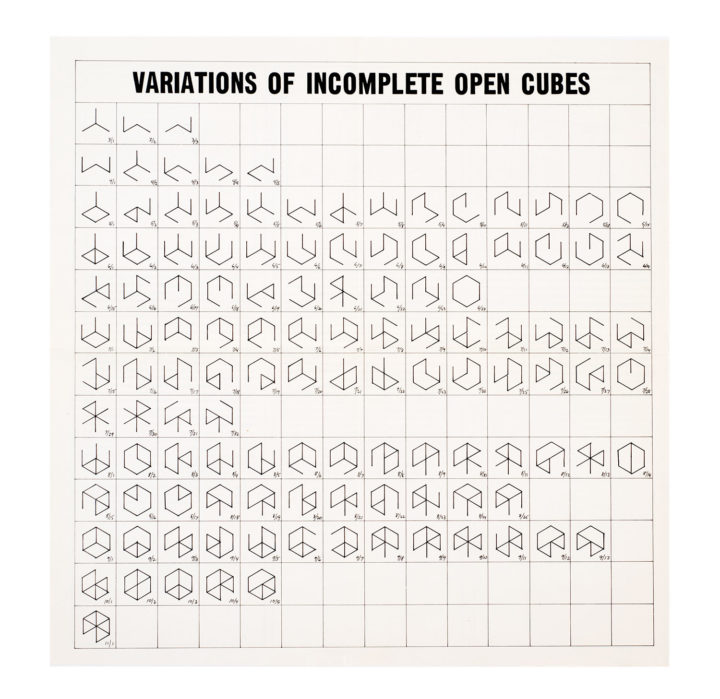

Perhaps the level of discomfort many feel when facing the results of more recent neural networks originates in the fact that we are now able to serialize “feel,” which is to say that neural networks are much better at understanding vague categories than previous systems. So a network might recognize “catness” in a series of images. It can identify key features that we, socially, hold to determine whether a line drawing is or is not, on the spectrum of representation, in some sense “catlike.” In a way that is oddly reminiscent of integral serialism, neural networks do this by creating matrices of the likelihoods of certain features occurring and when they are most likely to occur, and their ability to synthesize and use this information increases with the quality and robustness of the data that they are trained with. In the case of Quick, Draw!, for example, an online game developed by Google to be “the world’s largest doodling dataset,” people are asked to quickly draw specific simple nouns, and the software collects not only the images themselves but also the order in which each stroke is made. By weighting what strokes are made initially, and by evaluating where and how those strokes are similar, the network learns what we think is important to the concept at hand, be it “bed” or “cat” or anything else. The software can in turn generate hundreds of images that it thinks might match our criteria and ask us to evaluate these for accuracy.

Often, otherwise sober media sources promulgate the witchcraft aspect of AI by telling us that even the scientists creating the software don’t know what it is doing when it’s doing it. What this means is that from the software itself and the inputs provided one cannot predict what the specific results will be without actually running the program. In all honesty this is no different from the process at work in Terry Riley’s landmark minimalist (and very post-Cagean) work In C (1964), in which directions in the score make it more likely that some musical formations will occur than others, but broadly speaking one can never predict from the score what exactly the musicians will be playing at a given point in time. The score works by presenting an undefined group of musicians with a series of fifty-three short riffs to play. Whereas all players begin with the first riff, when they move on to the second and third riffs depends on them. Further, performers are invited to shift their rhythmic emphasis to create more or less interesting interactions among themselves. So while all performances of In C that this author has heard have been identifiable as such, with specific motifs becoming apparent and signaling that this is indeed the piece in question, none have been identical.
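The point about unpredictability can be made concrete with a toy simulation. What follows is only a sketch under loud assumptions: real performers choose when to move on by listening to one another, whereas here each player simply advances to the next riff with a fixed probability at each time step. The function name, the parameters and the five-riff "score" in the usage lines are all hypothetical, invented for this illustration.

```python
import random


def simulate_performance(num_players, num_phrases, advance_probability, seed):
    """Track which riff each player is on at each time step of a toy performance."""
    if not 0 < advance_probability <= 1:
        raise ValueError("advance_probability must be in (0, 1]")
    rng = random.Random(seed)
    # Everyone begins on the first riff.
    positions = [1] * num_players
    history = [tuple(positions)]
    # The toy performance ends once every player has reached the last riff.
    while min(positions) < num_phrases:
        for player in range(num_players):
            # Assumption: a coin flip stands in for each musician's own judgment.
            if positions[player] < num_phrases and rng.random() < advance_probability:
                positions[player] += 1
        history.append(tuple(positions))
    return history


# A toy score of five riffs and four players (illustrative values only).
history = simulate_performance(num_players=4, num_phrases=5, advance_probability=0.3, seed=1)
for step, positions in enumerate(history):
    print(step, positions)
```

Changing the seed changes the path the players take through the riffs, while the rules (same starting point, same order of riffs, same ending) stay fixed. The score constrains the outcome without determining it, which is all that the claim about inscrutable software amounts to.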

Of course In C was just one of many works employing indeterminacy and systems together. From the work of Robert Smithson, Mel Bochner, Steve Reich, Charles Gaines and Lee Lozano to that of anthropologists like Gregory Bateson and former secretary of defense Robert McNamara, systems had taken hold of everything by the mid-1960s. And whether they were generating Oulipo literature, happenings in the East Village or bombing campaigns in Vietnam, the form has remained unchanged from then until now; the only difference is the amount of quantitative information the systems are able to metabolize.

When Tesla CEO Elon Musk recently begged the governors of fifty American states to be wary of AI, using the hypothetical example of a stock-market trading robot launching a missile strike to boost its stock portfolio, the tech press exploded with derision. The kind of artificial general intelligence (AGI) that is capable of making lateral leaps from managing a stock portfolio to controlling defense assets was a straw man (or straw robot), they sneered. What was needed now, argued a chorus of AI industry professionals, was a legal and ethical framework for AI in its current state, what people call “stupid” AI, not legislation against AGI. Existing systems were being abused, perhaps; humans were using them to deliberately produce negative outcomes or cheat existing regulations. What these insiders were indicating was that, like all deployments of algorithmic thinking so far, behind the technical facade is the face of the person creating the system and the hand of the person using it. These are very human-made things, and they show their human origin everywhere.

There is a feeling that the understanding of human culture that neural-network computing is able to develop is uncanny and unnerving, but this is only true to the extent that the data training the neural networks are accurate. So there’s a third component here: not just the people writing and deploying AI software, but also us, who supply every “like,” “follow” and comment that gives these systems the data to better understand and map our culture. When the robot asks us to draw a cat, why do we try to do it as accurately as possible? Probably this is a holdover: we want the approval, we want the AI to like us and tell us that this is a good cat drawing, we want to be understood. The data we are providing to these machines are our most obliging, our most helpful and most cross-indexed quantified information. As long as the AIs are being deployed for goal-oriented objectives, we are still just confronting the Wizard of Oz, a man behind a curtain pulling the levers and deciding how all this information we so gladly handed over will be used.

Maybe what really lies behind our desire to provide so much information to systems we understand are controlled by others, largely for the benefit of others, is that we are anxious to contribute to the project of general, rather than dumb, AI. What we want is for these machines to be more meaningful; we want them to challenge our understanding of what it is to be a mind. We want an excuse to think about something hard instead of about something stupid, and in desperation this is our culture’s only chance to think about anything larger on a vast social scale.

Perhaps it goes without saying, but serialism in music was wildly unpopular. Even though most serial works were brief, concert-going audiences and the music-listening public at large stayed away. In his 1958 essay “Who Cares if You Listen?,” harsh serialist Milton Babbitt makes the case that composers are largely working for each other and that popular music and “serious” music had always been different things, and that by mathematically verifying the pursuit of all of music’s corners, advanced academic music would eventually change all music. Today, popular music is largely produced by electronic means, deploying and manipulating source material in ways that owe a technical debt to the work of mid-century academic composers. Babbitt was, maybe despite himself, totally right about how things would develop. He was also right about the chances that the music he and his friends composed would win over the listening public: it would be hard to find a Babbitt work in performance anywhere outside of a university today. Similarly, dumb AI today seems to hold relatively few options for creative work; at best we can use the machines to pursue the outer reaches of specific cultural systems (if we have the computing resources to usefully train them) to find some version of “move thirty-seven.” But it’s hard to see a terribly bright future in the creative fields since our own human work has been so incremental. Do we even need computers to dig our own grave here? In the wake of Zombie Formalism, is there any need for scientists to slave away on “style transfer” algorithms, capable of mapping one artist’s style onto another’s? Any trip to New York just reveals the tireless industry of thousands of young painters already actively performing this task. Surely we have already crowdsourced style transfer. When living artists invest their own life-energy into odious process-based abstractions, it’s obvious why we, as a culture, are crying out to interact with a general AI. Imagine a studio visit starting the way Arnold Schwarzenegger does at the beginning of Terminator 2 (“I need your clothes, your boots and your motorcycle”) instead of with the usual earnest, “thanks for dropping by.” Legendary human creativity has done a good enough job of becoming dumb AI. In our cultural basement, we’re dying for some decent company, itching to feel those boundaries pushed.